We talk a lot about the high end detectors here and that’s usually what we focus on. As such, I don’t actually know all that much about the affordable options, so I’ve been curious how well the less expensive detectors in the ~$200 price range perform. How capable are those units, really? Are there some standout units that, if you’re looking to save some cash, can give you a ton of bang for your buck? I hear a lot about how “Cobras suck” and yet I hear from owners that they’re getting saves and the detectors are nevertheless helping them avoid speeding tickets. I’m curious how much of a difference there really is, so it’s time to put together another test to find out.

For this test, we’ve got 10 different detectors, 4 different radar guns, and 2 different courses. The first course is really easy: it has a long straightaway, and both high end and low end detectors can give you plenty of time for a save, so it wasn’t illustrating the point I wanted to show. The second course is more difficult. It has some trees and curves, which helps separate the different classes of detectors and clearly shows how much of a difference there really is between them. That is what this test is all about: seeing how much of a difference there is between detectors in more challenging situations.

If you’d like to see videos of the opposite ends of the spectrum, here’s some comparison runs between the Redline and the Cobra. (It’s meant to be a comprehensive standalone video so it is about 24 min long.) We run both courses here, starting with the easy course against 33.8, run both detectors on the tough course against 33.8, then 35.5 (harder to detect), and finally we run just the Redline against K band in both Highway/TSR off mode as well as Auto/TSR on to see how much range drops if you change the filtering settings.

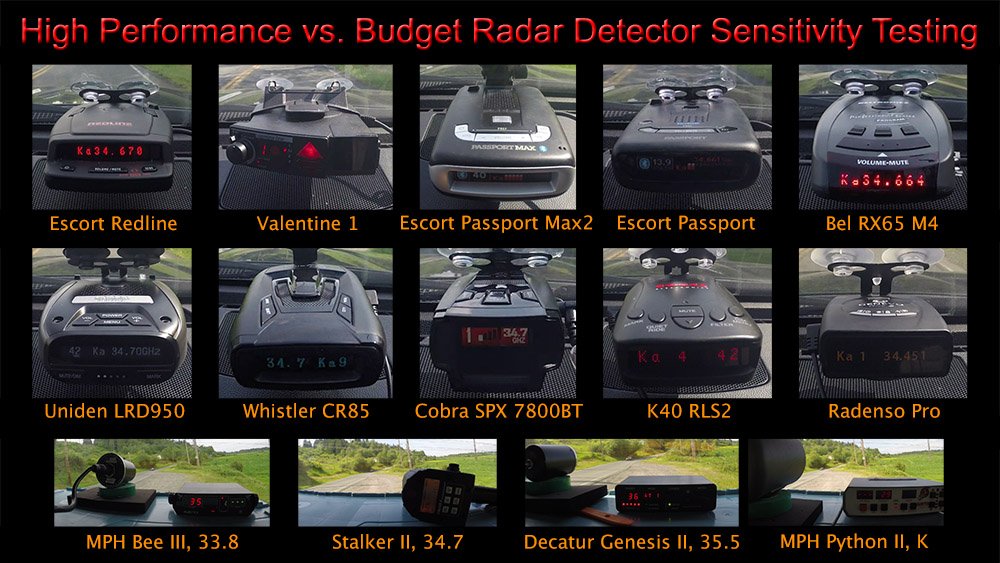

So now that you get the idea of what we’re looking to test, here’s our contenders:

Top end detectors setting a baseline:

Escort Redline BS/RDR: (retail $500, Amazon link)

Valentine 1 3.8945: (retail $400, Purchase direct)

Passport Max2 1.3: (retail $600, Amazon link)

Challengers:

Passport: (retail: $350, Amazon link)

RX65 M4: (discontinued, available online ~$130, Amazon link)

Uniden LRD950 1.35: (retail $400, online <$200, Amazon link, BRD link)

Whistler CR85: (retail $230, online $150, Amazon link)

K40 RLS2: (retail $400, Amazon link)

Cobra SPX 7800BT: (retail for $260, online $180, Amazon link)

Radenso Pro: (anticipated retail ~$489, Amazon link)

Radar guns:

MPH Bee III: 33.8

Stalker II: 34.7

Decatur Genesis II: 35.5

MPH Python II: K band

All detectors were set up for maximum sensitivity with the equivalent of 2/5/8 and RDR off, with the exception of the Radenso which I’ll get into in a bit. Highway mode, TSR off for K band.

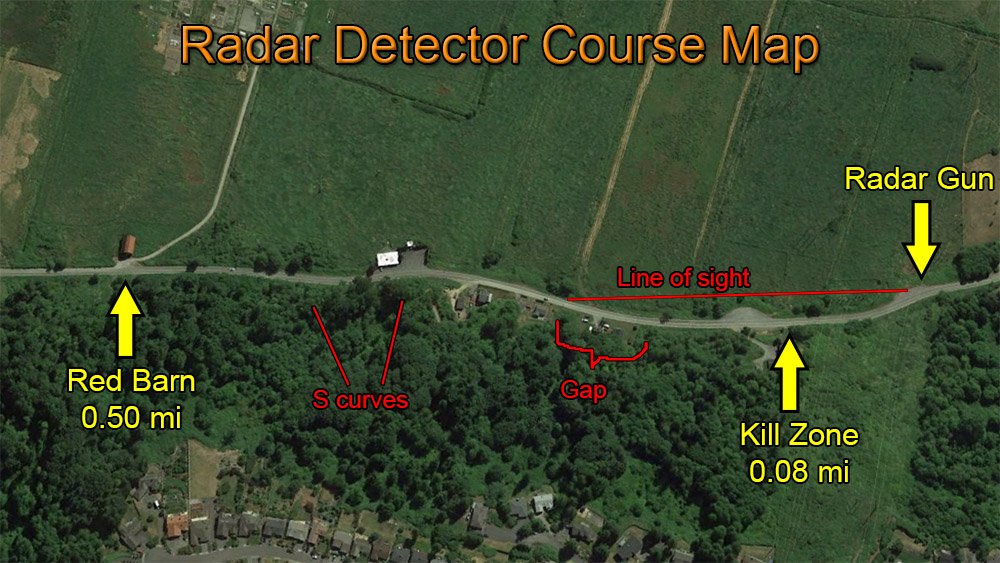

Radar Detector Course Info

Before we jump into the results, it’s important to understand the layout of the course.

The course length is about half a mile. Maximum detection range is at a red barn on the left side of the road. We then drive through some small S curves which help cut down on detection range. We then pass a gap in the trees where we have a visual line of sight of just under a quarter mile directly to the radar gun. Many detectors actually alert here. If they don’t, I consider that pretty bad if I can actually see the radar vehicle at relatively close range and the detector doesn’t alert. We want alerts at that point or farther away.
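To put those distances in terms of reaction time, here is a quick back-of-the-envelope sketch. The speeds are assumptions picked purely for illustration (the actual test speed isn't stated here), and `warning_seconds` is just a helper name made up for this example.

```python
def warning_seconds(distance_miles: float, speed_mph: float) -> float:
    """Seconds needed to cover distance_miles at a constant speed_mph."""
    if speed_mph <= 0:
        raise ValueError("speed_mph must be positive")
    return distance_miles / speed_mph * 3600.0


# Assumed speeds for illustration only; the real test speed is not given.
for mph in (45, 60):
    half = warning_seconds(0.5, mph)      # roughly the full course length
    quarter = warning_seconds(0.25, mph)  # roughly the line-of-sight gap
    print(f"{mph} mph: half mile = {half:.0f} s, quarter mile = {quarter:.0f} s")
```

At highway-ish speeds, an alert that only comes at the gap leaves you somewhere around 15 to 20 seconds before you reach the radar car, and less than that before the gun can actually clock you.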

Here’s a video driving down the course, pointing out a few of the relevant areas.

You’ll notice that I’m running two cameras, one recording the detector, one recording the radar gun. When I get up to the radar car I quickly honk my horn so that I can make sure the two clips are perfectly in sync. This way I can see exactly how much time I have before the kill zone. I can also look back and see if there’s any traffic going in either direction during the runs and see if that had any impact on the results. Videos in both directions were shot for all runs.
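For anyone who wants to do the same thing with their own footage, the honk sync boils down to a simple timestamp offset. This is only a rough sketch of the idea, not the exact workflow I used; the function names and all of the timestamps below are made up for illustration.

```python
def to_radar_clock(t_detector: float, honk_detector: float, honk_radar: float) -> float:
    """Map a timestamp from the detector clip onto the radar-gun clip's timeline.

    The horn honk is the same real-world instant in both clips, so the
    difference between its two timestamps is the offset between the clips.
    """
    return t_detector + (honk_radar - honk_detector)


def warning_before_reading(alert_detector: float, first_reading_radar: float,
                           honk_detector: float, honk_radar: float) -> float:
    """Seconds between the detector's first alert and the gun's first reading."""
    alert_on_radar_clock = to_radar_clock(alert_detector, honk_detector, honk_radar)
    return first_reading_radar - alert_on_radar_clock


# Hypothetical timestamps in seconds from the start of each clip.
warning = warning_before_reading(
    alert_detector=60.0,        # detector first alerts (detector clip)
    first_reading_radar=300.0,  # gun first shows a speed (radar clip)
    honk_detector=95.0,         # honk heard in the detector clip
    honk_radar=312.5,           # same honk heard in the radar clip
)
print(f"Warning time before the gun got a reading: {warning:.1f} s")
```

A positive number means the detector alerted before the gun got a reading; a negative number would mean the gun had you first.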

So now that we have that established, let’s dive into the results. I’m going to show you the raw results first so you can see exactly what I saw, and then we’ll talk about analyzing and interpreting the results.

Results

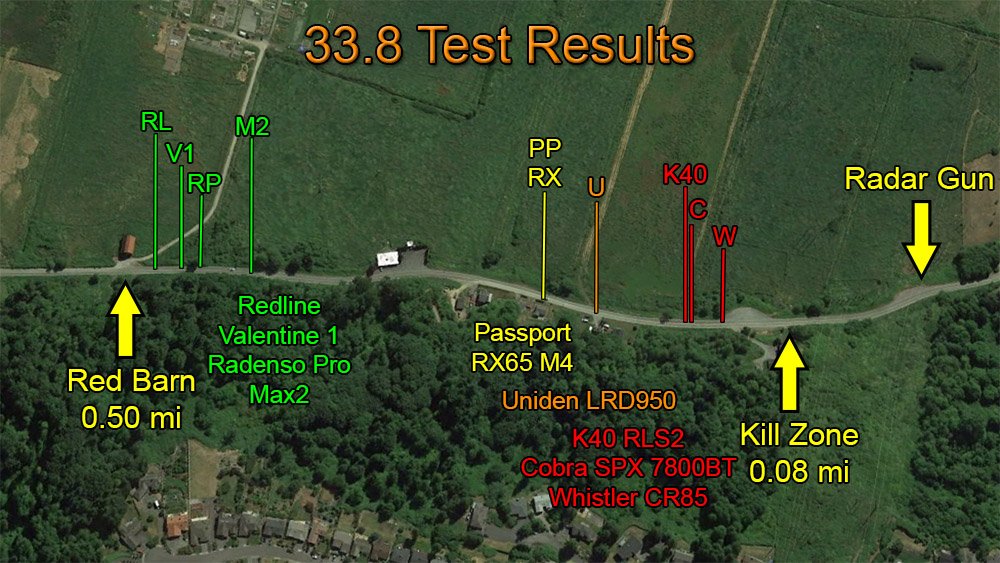

33.8:

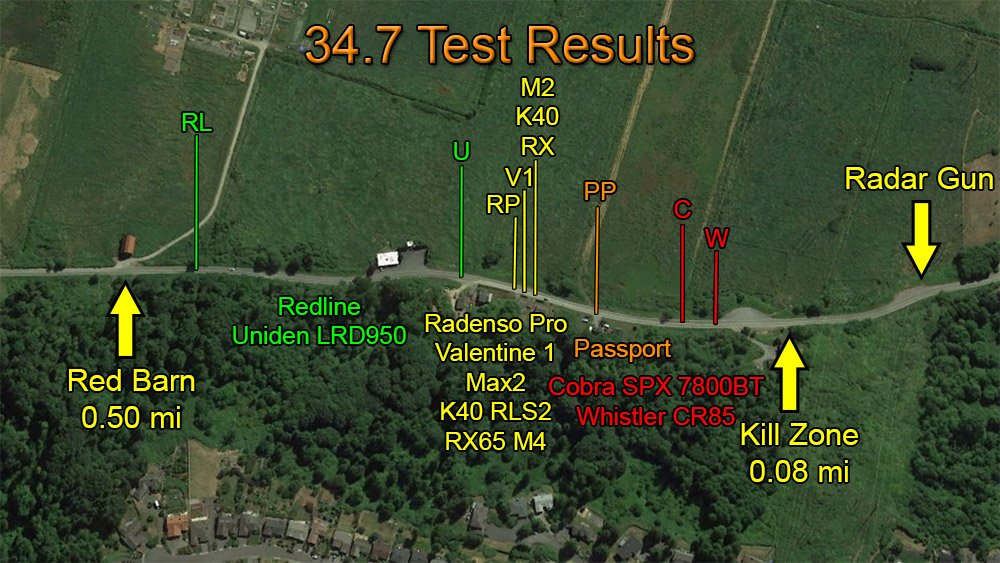

34.7:

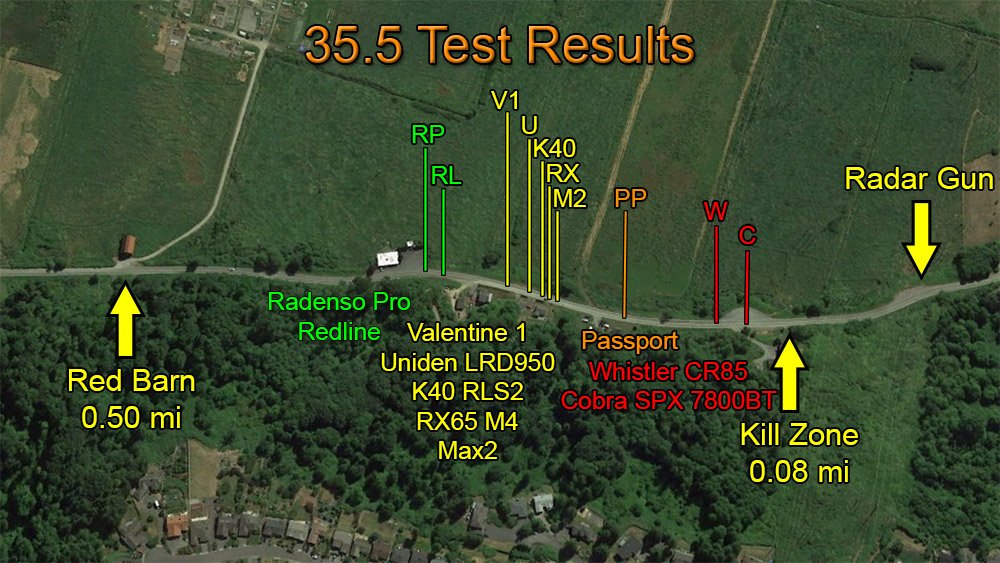

35.5:

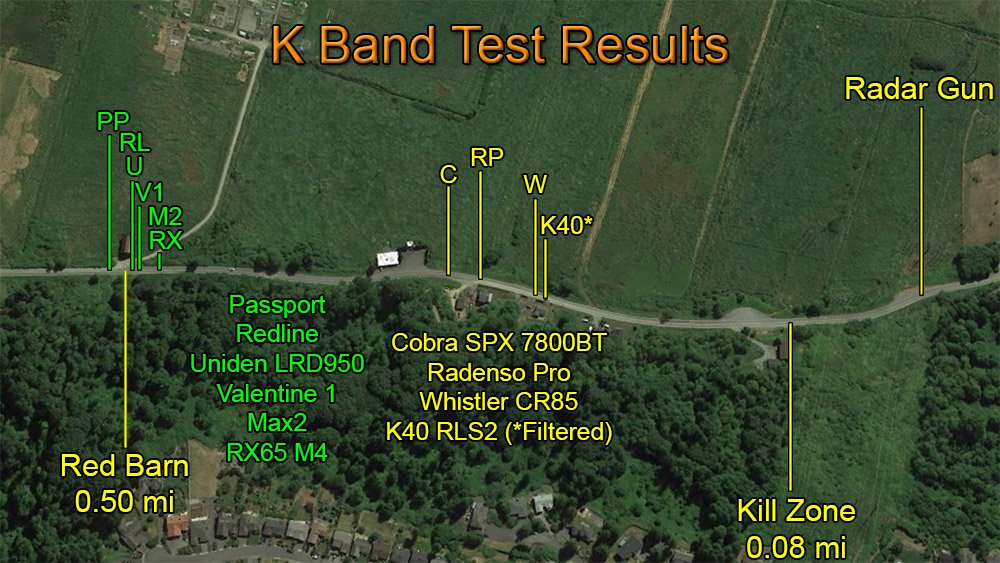

K Band:

So as you can see, we definitely have things broken up into clusters. The colors are somewhat arbitrary and detectors are grouped by clusters, but generally the yellow clusters are around that gap in the trees where we get line of sight to the radar vehicle. Green is before that gap which is good. Orange or red is if the detectors didn’t alert in that gap which is bad.

Interpreting these results

Okay so this part is important. I spent hours and hours and hours going over all the video, figuring out exactly where the detectors went off, and plotting them out down to the pixel. So in the interest of precision and accuracy, what you see on the map is reflective of how the detectors fared. That said, if a detector goes off 10 feet before another detector, is it actually 0.1% more sensitive? I wouldn’t say that.

I typically ran each detector only one time, due to time constraints. Ideally I’d love to have 10 copies of each detector to account for sample variation, do 10 runs with each detector to see the consistency and weed out any outliers, have absolutely no traffic whatsoever (traffic was light fortunately, but did at times have an impact on the results), and so on. Heck, I’d even love to have no breeze at all so that there’d be no movement in the branches and trees, which could potentially block a radar signal or move and allow some of it to sneak through. I’d love to have ideal testing scenarios, but even doing as much as I can, realistically there’s only so much I can do.

I did do a few different runs multiple times for different reasons and I did see fairly consistent results most of the time. However, if one detector happened to go off a second or two earlier the next run and another detector went off a second or two later the next run, detectors could easily swap positions and so just because you see one detector just before another, there really is some fudge factor that we’re simply going to have to account for.

The way I would recommend looking at the results is to look at the trends. If one detector consistently does well, keep that in mind. If one detector consistently does poorly, keep that in mind.
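If you like to see that idea in numbers, one simple way to look at trends is to average each detector's finishing position across several tests instead of reading too much into any single run. This is only an illustrative sketch: the detector names "A", "B", and "C" and the orderings are placeholders, not results from this test, and `average_ranks` is a made-up helper.

```python
from statistics import mean


def average_ranks(results: dict[str, list[str]]) -> dict[str, float]:
    """Average finishing position per detector.

    results maps a test name to detector names ordered best to worst.
    """
    ranks: dict[str, list[int]] = {}
    for order in results.values():
        for position, name in enumerate(order, start=1):
            ranks.setdefault(name, []).append(position)
    return {name: mean(positions) for name, positions in ranks.items()}


# Placeholder orderings, NOT real results from this test.
placeholder = {
    "test 1": ["A", "B", "C"],
    "test 2": ["B", "A", "C"],
    "test 3": ["A", "C", "B"],
}

for name, avg in sorted(average_ranks(placeholder).items(), key=lambda kv: kv[1]):
    print(f"{name}: average position {avg:.2f}")
```

Detector A wins two of the three placeholder tests and B wins one, but the averages show A ahead overall, which is the kind of pattern that matters more than who edged out whom on a single pass.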

Most of the results were what we expected. Things like the Redline typically leading the pack. V1 and Max2 generally behind somewhere. Other detectors in that area or below.

Some detectors had some interesting results, like the Passport actually winning out for the longest detection of the day on K band. It was the only detector to ever alert before the red barn. Would it have done this repeatedly if I did a bunch of runs? Maybe, maybe not.

The Cobra didn’t do too hot overall, but that’s to be expected. Unfortunately the Whistler was the worst performing detector out of the group. Not sure if it’s that the Cobra got a little better, but it’s a shame to see the Whistler get beat out by a Cobra. That said, the results are the results.

There were some odd things that happened like I got a freakishly long detection with the V1 on 35.5. Notice that range for everything is reduced there. When I got that long detection on the V1, I was driving towards the radar car and joehemi gets on the walkie talkie and radios in that a car and a bike had actually pulled into the gravel pullout and parked behind them. I’m positive this impacted the results. I reran the test because of this and the V1 dropped back down to normal like we expected.

Setting up the 35.5 course initially, I did a few passes with the Redline to figure out why detection range was so short. It looks like 35.5 is just harder to detect. However, I did find that the results were fairly consistent. That said, when I was making my actual test runs, the Redline got a nice long detection on 35.5. Unfortunately, halfway through the 35.5 testing I hear a beep coming from down in the passenger seat where all my other RD’s were. The Radenso Pro went off and I realized that it had been running the entire time I was testing 35.5. Because of this, I had to chuck the results and restart 35.5 testing. This time, every detector actually did the same or even a little bit worse. Very odd… Maybe that detector causes interference in a good way?

Maybe there is some inconsistency in the results that I can’t account for. I’ve gone over the videos many, many times and while there are some variables I can account for, there are some I can’t, which leads to variability in the precision and consistency of the results.

At the end of the day, I think there’s just some variability that, try as we might, we simply can’t account for. For example, look at the Oct 2014 RD test results that the MWTC of ECCTG put on. You’ll see some interesting stuff like K band testing where Redline #1 was at the top of the pack and Redline #2 was towards the bottom. The Max2 smoked everyone on 34.7, but was near the bottom of the pack on 35.5 where it got less than half the range of the top end performers. Redline #2, which was near the bottom on K band, topped the list on 35.5.

So what I’m saying is weird stuff happens. No one test will tell you everything and give you the big picture. Multiple tests, a variety of courses, different backyard testers, different radar guns, multiple copies of detectors, different terrains and traffic conditions, heck even testing in clear conditions and in the rain. I think they all work together and when you look at the combination of test results all put together, you get a feel for the big picture. There’s gonna be some weird things that happen that, try as we might, we may not be able to explain or account for. That’s why I think it’s better to look at lots and lots of tests and notice the overall trends and patterns. That’s really the best way of approaching this, IMHO, not looking at any one test and trying to derive the ultimate answer from just that.

Speaking of multiple tests, I’m going to work on a post next talking about K band filtering. I did a lot of testing on K band to see how the different filtering options affected the performance of these detectors. Since this post is already fairly long and that’s a related but different topic, I’ll do another thread for that test. Edit: Here it is. https://www.rdforum.org/showthread.php?t=44374

Summary of each detector’s performance

So with all that said, let’s go over how each detector fared overall:

Escort Redline: As expected, the Redline generally led the field and was the leader for 33.8 and 34.7. It came in second on 35.5 to the Radenso Pro (impressive by the new detector!) but it also did even better than the Radenso on one of the earlier 35.5 runs that I had to throw away due to interference. It was also at the top of the pack on K band, being matched by the Uniden and actually beat by the Passport. Not sure what that was about, but hey…

Valentine 1: Consistently did very well. While it didn’t win out any test individually, in the clusters it was in it generally did very well. For example, if you look at the gap where we had line of sight, notice that it alerted towards the front of the gap. Some of the other detectors took longer to alert once they could see the signal. This was a definite trend with the V1.

Passport Max2: Most expensive detector in the group. Generally performed similarly to the V1, yet would alert a bit afterwards. Notice how it’s towards the rear of the pack in its respective clusters. This was an interesting trend and is consistent with my previous testing. I’ve never been able to confirm the claim that it has the longest range on all bands against all guns. It also had some interesting results with Auto and TSR which I’ll get into in the other thread on that topic.

Passport: So given that it’s the top performing M4 with BS/RDR, how much better would it do compared to the RX65 M4, an earlier version of the M4 platform? Well while it holds the honors of the longest detection of the day, it also performed in the same ballpark as the RX65 (makes sense), and yet it would sometimes alert behind the RX65 and even the other detectors in the pack. Look at the 34.7 and 35.5 results, for example. Very strange.

RX65 M4: Given the inexpensive price of this unit, it did very well. It’s one of my top picks for an affordable option that still gets reasonable performance.

Uniden LRD950: It didn’t always do well, given some of my previous tests, but it still holds its own and compares favorably with some of the other options out there. Given that it can be had now at $200 and has other features like GPS lockouts and low speed muting, it’s an interesting option to consider. It was a little lacking on 33.8, but did well on 34.7, beating the V1 and Max2, and it did just fine on 35.5. It also did well on K band and I found out something incredibly interesting about its K band filtering performance, which again I’ll address in the other thread.

Whistler CR85: Unfortunately, this was the worst performing detector of the group, even getting beat out by the Cobra. The Whistler consistently did poorly. It came in last place for 33.8 and 34.7. It was second to last in 35.5 and again in K band. This is consistent with the previous testing I’ve done with the unit.

K40 RLS2: While this unit has an outstanding K band blind spot monitoring filter, its performance is pretty lacking and it’s something that even K40 admits. They aim for quietness here, not sensitivity. It tended to trend towards the bottom of the pack and its $400 price tag is not backed up by its performance. It’s also the only detector that does NOT let you turn off its K band filtering options and so while the other detectors were tested with K band filtering turned off, this one was tested with it turned on.

Cobra SPX 7800BT: Always glad to get a Cobra into the mix so we can see how well it fares. I had kinda assumed it would be the worst performer in the pack, because Cobra, and it’s why I used it in my video comparing high end and low end detectors, but it actually did fairly decently on K band and generally beat out the Whistler. The thing falses to 35.5 a lot in practice when you encounter K band and given that its performance is terrible on 35.5, it definitely is a detector you want to avoid if 35.5 is used in your area. If you like the detector’s bells and whistles, the Uniden is probably a better pick. That antenna platform is used in Cobra’s more affordable detectors too so the performance will be the same as the other SPX series detectors, so if a person wants a less expensive unit, the RX65 M4 is probably the way to go.

Radenso Pro: This was an interesting one to test. Here’s the full discussion on this new detector. I found out about it 2 days before the test and had it overnighted to me since I was already gonna be doing this test anyways so it was a late entrant. It is a very solid performer on Ka band! It generally runs at the top of the pack and even beat out the Redline on 35.5. Very impressive performance. Actually, it’s even more impressive considering it was being run with band segmentation turned off. Oddly enough, the unit incorrectly registers Ka band signals too low and if you turn segmentation on, it won’t alert to legit Ka band signals. That’s why I ran it turned off. If they fix this issue and segmentation actually improves performance, wow. On K band, however, it was really nothing special. Bottom of the pack performance, getting beat out by the Cobra. That’s never a claim that you wanna make. This is something that they’re addressing and I’m surprised to see that this is the case at all, especially since this was originally a European detector where K band detection is so important. Once they address its K band sensitivity, I’m curious to see how it fares.

Thank you!!

Finally, I wanna give a massive and huge thank you to everyone who helped out in this test. The majority of the equipment was supplied by other enthusiasts within the community, as well as some manufacturers and distributors. It’s 100% because of this amazing community that this test can even happen. In the interest of full disclosure, here’s who supplied each of the detectors:

Redline: @joehemi‘s personal copy

V1: My personal copy

Max2: My personal copy, provided by Escort originally for testing

Passport: @hiddencam‘s personal copy

RX65 M4: @kdo2milger‘s brand new personal copy

Uniden LRD950: @BestRadarDetectors‘ personal copy

Whistler CR85: @jdong‘s personal copy

K40 RLS2: Retail unit supplied by k40radar.com

Cobra SPX 7800BT: Retail unit supplied by @BestRadarDetectors for testing

Radenso Pro: First US production unit provided by the US distributor @Hügel66

Finally, a big thank you to @joehemi as well for helping find these courses and man the radar car during hours and hours and hours of testing. 🙂

Thank you for reading!

The full discussion from this test is available on RDF. https://www.rdforum.org/showthread.php?t=44373

If you’ve found this test helpful and you’d like to buy one of the detectors you see here, you can support me and continued testing by using any of the Amazon links below. 🙂

Escort Redline : (retail $500, Amazon link)

Valentine 1: (retail $400, Purchase direct)

Passport Max2: (retail $600, Amazon link)

Challengers:

Passport: (retail: $350, Amazon link)

RX65 M4: (discontinued, available online ~$130, Amazon link)

Uniden LRD950: (retail $400, online $200, Amazon link)

Whistler CR85: (retail $230, online $150, Amazon link)

K40 RLS2: (retail $400, Amazon link)

Cobra SPX 7800BT: (retail for $260, online $180, Amazon link)

Radenso Pro: (anticipated retail ~$489, Amazon link)

| This website contains affiliate links and I sometimes make commissions on purchases. All opinions are my own. I don’t do paid or sponsored reviews. Click here to read my affiliate disclosure. |

8 comments

I would be curious whether if you test with a different Whistler CR85 unit the results would be the same. I’ve done a fair amount of real-world use testing of the CR85 versus Passport Max, Beltronics GT-7 and the Cobra DSP 9200 BT and found its detection distance to be roughly comparable to the others. It’s a tad behind, but not as much as indicated in your tests, leading me to think something may be amiss with the unit you were using. That said, the CR85 certainly falses more than the others, and wouldn’t be my first choice in a detector. But for $150, it’s a pretty good deal (if it performs as it did in my testing).

Author

Interesting. I’ve seen a number of different tests and the Whistlers haven’t fared too well. Perhaps you have a better performing unit, who knows? Got a link to your results you can share? I’d love to see. 🙂

Apologies I didn’t get back to you sooner, I just saw the response notification. Here is a link to the comparison I did between the CR85 and Passport Max back in 2014: http://www.techlicious.com/review/escort-passport-max-versus-whistler-cr85/. I ran the two head-to-head over a number of road trips, in a variety of conditions. Detection performance was relatively similar, though the Passport Max was much better at not alerting to collision avoidance systems.

Radar Roy also tested the CR85 and judged it to be his top pick under $200. He doesn’t say much about how he tested it, but given his passion for Escort products, giving the nod to Whistler was notable: http://www.radarbusters.com/Whistler-CR85-Radar-Detector-p/whistler-cr85.htm.

I’ve continued to pull the Whistler CR85 in when testing other detectors, and its performance is consistently very good and remains my pick for under $200 options.

Also, it should be noted that the CR85 is identical internally to the older model Whistler Pro-78SE, which received positive reviews from a number of sites.

Author

Yeah in the testing we’ve done, neither are particularly stellar performers, but the Whistler is pretty bad in terms of range when terrain gets a bit challenging. There was even a test done where the detector only gave 1-2 sec of warning time, far less than competing detectors. I’d recommend checking it out. https://www.rdforum.org/showthread.php?t=38256

I totally get that everyone likes to save money, but if a detector can’t give you sufficient warning time against constant on, that’s unacceptable in my mind. Now that there’s better options out there like the Uniden which offer better performance and very good filtering, there’s no reason to go with a Whistler anymore.

I’ve heard nothing but fantastic things about Mike B, the guy behind Whistler, but unfortunately the products are simply not up to par these days.

In your testing, I’m assuming you ran the detectors individually, not both at the same time, correct?

The Max wasn’t all that great at blind spot filtering back then, and if it was better than the Whistler at the time, that’s not good for the Whistler. The Max has since been updated for better BSM filtering, but even still it lags behind the V1, Stinger, and Uniden detectors in effectiveness.

If you’re looking for an affordable detector, definitely take a look at the new Unidens. The DFR5 and DFR6 should be strong competitors in the $200 and less club.

I’m really wondering if Whistler may have a production quality issue as my results (and, apparently Radar Roy’s) differ so significantly from the other testing. In all my scenarios, the Whistler gave ample time to slow down before I was in radar range. (Funny that rdforum tested with an Audi, as that is the one model Whistlers are hypersensitive to. I jokingly referred to the CR85 as my “Audi detector”.)

FWIW, I’ve seen similar possible production variations from Escort, as well. My detectors (Passport Max and Max 2) have worked great. Yet if you check out the Escort forums and Amazon reviews, there are plenty of people complaining about poor/non-detection.

I did run the detectors at the same time (and yes, I’m aware of the potential issue with doing so) and independently. However, I didn’t find any notable difference when I had them both going or not, and there was no better way to test them over such a wide variety of real life scenarios. I would be curious to see whether anyone has done testing to see if performance truly is impacted by running multiple units (modern ones, not the really old models), or whether that is being used as a false issue to discourage head-to-head testing.

Have not had a chance to try out the Unidens yet. Will have to give them a shot.

Author

Yeah the Whistlers can provide several miles of range in easy situations such as flat open deserts. It’s when the terrain starts to get more challenging (hills, trees, curves, etc.) that it really starts to show its limits, so it really depends on the location you’re testing in. 😉 Around here I’m lucky to get half a mile with a Redline or Stinger! haha

There’s definitely been issues with the Max. Most notably cases splitting apart, bouncy mounts, continued 33.6xx falsing, and poor quality control overall. We’re also seeing more variability in the test results with the Max’s than any other detector for some reason. Most provide adequate performance. Every now and then there’s a standout showing. Sometimes there’s incredibly poor showings. Not sure why there’s more discrepancy with the Max’s than with other detectors. It might be due to Escort’s new digital scanning algorithms?

I get what you’re saying about running two detectors at the same time, and it’s true that’s much easier to test, but unless you’re using stealth detectors, you WILL get interference that will invalidate your results, unfortunately. That’s one of the main reasons we test one at a time under controlled conditions. If you’d like to read more about that, please read this: http://www.escortradarforum.com/forums/showthread.php?t=67

Let me know what you think of the Unidens. I think you’ll be pleasantly surprised. 😉

Whistlers are honestly terrible. Even the cheapest Cobra performs better than the CR85 or CR90 on range.

Hey Vortex, that video was hella informative even though you didn’t get to do everything you wanted. I appreciate all the hard work you put into this, and it helped me form an idea of how the RDs work and how well they work.